In defense of stupid charts

Here at The Null Hypothesis, we acknowledge the complexity of the world and celebrate the language and tools that science provides to help us understand that complexity. That is why we respect those with a mastery of that language and call out those who misuse and abuse it:

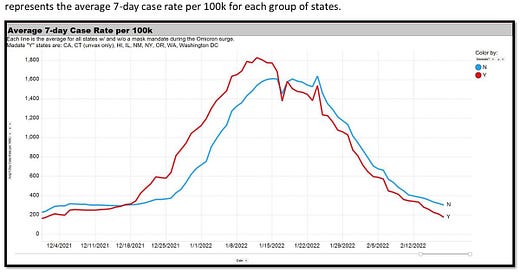

When we think “science”, we often think of big words and phrases like “confounding variables” or “statistical significance” or “case-control study”, because those are the sorts of terms that appear in scientific publications. But what you see in a published study is merely the end result of research, not the research itself. Sometimes, for a scientist to get a foothold on a multifaceted and difficult problem like “do masks stop COVID-19”, they need to throw away all that fancy jargon and just ask the available data the most basic possible question. Namely: did the places with mask mandates have less COVID? Torrey Jaeckle answers, using data from the US CDC:

Now, those with a conviction that masks do work against COVID, as I had a few months ago, understandably look at this chart, and others like it, with some skepticism. They point out that:

- states without mask mandates may be underreporting COVID (perhaps, but wouldn’t a restriction that actually slows the spread affect the duration of the case wave and not just its magnitude, à la “flatten the curve”?)
- some people didn’t obey the mask mandates (perhaps, but if masking some people didn’t make any difference at all, why do you expect that masking everyone would?)
- there are other differences between masked and unmasked states that were not controlled for in the analysis (perhaps, but what are they? And where were you when major news outlets pushed for masks by comparing Japan to the US?)

All of these criticisms point toward the same idea: that the chart is, for lack of a better word, stupid. It uses case counts, which are notoriously fraught with noise and potential bias. It makes no attempt to discern how many people in the masked states actually followed the mandate. It ignores confounding variables, like vaccination.

And they’re right: the chart absolutely is stupid. You don’t need formal training in data visualization to make it; you just need enough knowledge of Excel. You don’t need any special insight to think of making it, either; you just need curiosity and a willingness to ask the most primitive possible question.

Because of the aforementioned deficiencies, the chart certainly doesn’t settle the question of whether masks have an effect on COVID. It does cast extreme doubt on some of the more egregious claims about masks, like the early predictions of 67%+ efficacy against infection, or the recent CDC infographic that reports an odds ratio of 0.44.

But if Jaeckle’s stupid chart were the only real-world data we had, it’d still be reasonable to believe that masks are maybe 10% or 20% efficacious. The chart is only the starting point for our research. The next steps would be to look at cases at the county level, use a metric other than cases, look at historical mask mandate data rather than recent data, or use statistical methods to control for confounding variables. (Jaeckle himself does some of this in the thread.)
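To show how little machinery a comparison of this kind needs, here is a minimal sketch in Python. The four rows are invented placeholders, not CDC figures, and the names `ROWS`, `cases_per_100k`, and `group_means` are made up for the illustration. It is not Jaeckle's actual method, only the same basic question asked of a toy table.

```python
from statistics import mean

# Hypothetical rows: (state label, had_mandate, cases, population).
# These numbers are invented for the sketch; they are NOT real CDC data.
ROWS = [
    ("A", True, 48_000, 4_000_000),
    ("B", True, 125_000, 10_000_000),
    ("C", False, 30_000, 2_500_000),
    ("D", False, 104_000, 8_000_000),
]


def cases_per_100k(cases, population):
    """Convert a raw case count into cases per 100,000 residents."""
    return cases / population * 100_000


def group_means(rows):
    """Return (mean rate in mandate states, mean rate in non-mandate states)."""
    with_mandate = [cases_per_100k(c, p) for _, m, c, p in rows if m]
    without = [cases_per_100k(c, p) for _, m, c, p in rows if not m]
    return mean(with_mandate), mean(without)


mandate_rate, no_mandate_rate = group_means(ROWS)
print(f"mandate: {mandate_rate:.0f} per 100k")
print(f"no mandate: {no_mandate_rate:.0f} per 100k")
```

Swapping in real state-level counts is the whole analysis. Everything this sketch leaves out (reporting differences, compliance, confounders) is exactly the list of criticisms above.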

Many are capable of making the same chart Jaeckle did. In fact, the idea of the chart is so simple that if you are American, curious about how mask mandates affect COVID spread, and able to get your hands dirty with the data, it’s difficult not to make it. But most of us do not make Jaeckle’s chart because we cannot accept that it will be stupid. We feel that data this complex must be left to the experts: the people who know how to do a “real” and “proper” analysis.

That kind of deference leads to studies like this.

The study, by Huang et al., comes to the conclusion that mask mandates are staggeringly effective:

“On average, the daily case incidence per 100,000 people in masked counties compared with unmasked counties declined by 23 percent at four weeks, 33 percent at six weeks, and 16 percent across six weeks postintervention.”

The study uses over 10 different data sources, taking into account COVID-19 case incidence, county mask mandates, wet-bulb temperature, population density, poverty levels, presidential election voting patterns, diabetes rates, and more. It models disease transmission using cubic B-spline functions with no interior knots, using the method of Anne Cori to produce estimates of the instantaneous reproduction number. All this data is then cooked up into generalized linear mixed effects models using a log link function while adjusting for covariates. Basically, it’s all incredibly clever.

Someday I may examine the study more deeply and write an article about it (Dr. Vinay Prasad has already pointed out its more serious flaws), but today I want to make a metascientific point about the value of a stupid analysis like Jaeckle’s versus a smart analysis like Huang et al.’s.

We are biased toward smart analyses, perhaps because their intimidating vocabulary makes them sound authoritative or because their authors are necessarily highly credentialed and intelligent. We refer to Jaeckle’s chart as “just an observation”, but call Huang et al.’s study “actual science”. Peer reviewers at scientific journals have the same tendency to elevate the complex and disparage the simple. But there are several reasons we ought to be doing just the opposite. I don’t mean to say that there is no value in the sophisticated methods of Huang et al., but they should be used and interpreted with an extreme caution that I have rarely seen in discussions of COVID-19 in the past two years.

Smart analyses shouldn’t be necessary

On Episode 16 of Series 7 of the trivia panel show QI (Quite Interesting), panelists discussed the age-old question of whether wearing vertical stripes or horizontal stripes makes you look slimmer.

This resulted in the following exchange between host Stephen Fry and guest David Mitchell:

Stephen: [R]esearch from a man called Dr. Peter Thompson, of York University, has discovered that the large majority think the one in the vertical stripe is larger than the one in the horizontal stripe, when they are the same size.

David: Surely this shows, actually, that it makes no real difference at all, because we’re determining whether wearing vertical or horizontal stripes makes you look thinner, and you can’t tell by looking. You have to do research. The difference is so slight you have to do research with hundreds and hundreds of people.

David’s point is more pertinent now than ever. Dr. Thompson only had to carry out his smart research because the easy method of using your own eyes didn’t show any difference at all. Likewise, Huang et al. only had to use cubic B-spline functions because all the easy comparisons regarding mask mandates failed to demonstrate their efficacy.

That doesn’t mean anything is necessarily wrong with the study. I am merely sharing what I believe is a valid observation about the study’s existence.

Smart analyses are plagued by analytical flexibility

I have spoken briefly about p-hacking in this newsletter before. It is the practice of analyzing the same data in many different ways in order to find a statistically significant result. p-hacking is cheating, and smart studies are especially prone to it.
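A toy simulation makes the mechanism concrete. The sketch below assumes a world with no true effect at all, then counts how often a "study" finds at least one result with p < 0.05 when it is allowed several attempts. Two simplifications are assumptions of the sketch, not features of any real study: each attempt draws fresh, independent data (real p-hacking reanalyzes the same data, so the attempts are correlated and the inflation is smaller, though still real), and the p-value uses a normal approximation. All function names are invented for the illustration.

```python
import math
import random
from statistics import mean, variance


def two_sided_p(a, b):
    """Approximate two-sided p-value for a difference in means (normal approximation)."""
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    z = (mean(a) - mean(b)) / se
    return math.erfc(abs(z) / math.sqrt(2))


def any_significant(rng, n_analyses, n_per_group=50, alpha=0.05):
    """Simulate one null study analyzed n_analyses ways; True if any attempt 'works'."""
    for _ in range(n_analyses):
        # Both groups come from the same distribution, so any "effect" is noise.
        a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        if two_sided_p(a, b) < alpha:
            return True
    return False


def false_positive_rate(n_analyses, n_studies=1000, seed=0):
    """Share of null studies reporting at least one significant result."""
    rng = random.Random(seed)
    hits = sum(any_significant(rng, n_analyses) for _ in range(n_studies))
    return hits / n_studies


if __name__ == "__main__":
    for k in (1, 10):
        print(f"{k} analyses per study: {false_positive_rate(k):.2f}")
```

With independent attempts, the chance of at least one false positive in k tries is 1 − 0.95^k, which is about 40% for k = 10. The simulated rates should land near 5% and 40% respectively.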

Huang et al. had a wealth of analytic flexibility. They could have included average age or body mass index as a covariate, or excluded poverty or diabetes rates. They could have used a different measure of social distancing. They could have used one, some, or all of the covariates in the process of matching counties. They could have chosen not to exclude days with an Rt value outside of the 2.5–97.5 percentile range (this, in particular, was a strange choice, given how important high Rt values are to the spread of COVID), or used a different percentile range. They could have used a different method for estimating the Rt values. They could have chosen a different end date than August 2020.

The choices the authors made are not the obvious “correct” choices. There are millions of equally valid ways that this study could have been carried out. With that many choices, it should not be surprising at all that Huang et al. were able to find just one (two or three if you count the sensitivity analyses) that makes mask mandates look effective. Of course, the code for the study is not public, so there is no way for you or me to know what happens if you analyze the data slightly differently.
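How quickly do independent choices multiply into "millions"? Here is a back-of-the-envelope sketch. Every option count in it is a hypothetical placeholder chosen for illustration; none comes from the paper, and the real decision space is unknown. The point is only that a handful of modest-looking decisions multiply out fast.

```python
from math import prod

# Hypothetical number of defensible options per decision.
# These counts are invented for illustration and are NOT taken from Huang et al.
CHOICES = {
    "covariates in the model (10 candidates, each in or out)": 2 ** 10,
    "measure of social distancing": 3,
    "covariates used for county matching (10 candidates)": 2 ** 10,
    "Rt trimming rule (none, or one of three percentile ranges)": 4,
    "method for estimating Rt": 3,
    "study end date": 6,
}


def count_specifications(choices):
    """Multiply the option counts: each combination is one possible analysis."""
    return prod(choices.values())


if __name__ == "__main__":
    print(f"{count_specifications(CHOICES):,} possible specifications")
```

Under these made-up counts the product runs to hundreds of millions. Halving every count would still leave millions of specifications.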

The only realistic way to fix this issue for a study as smart as this one is to use preregistration. That is, have the authors declare exactly how they plan to analyze the data before they have access to it. Usually, this requires that the study is prospective, which Huang et al.’s was not.

Jaeckle’s stupid chart does not have the same problem. There are maybe four or five different questions, not millions, that you could ask that are as natural as “did the states that had mask mandates during the Omicron wave have fewer COVID cases per capita?”.

Smart analyses thrive on their inaccessibility

The Huang et al. study has been tweeted 1300 times and mentioned by 51 news outlets.

Exactly zero of those 51 news outlets had a single employee who actually read the study. Yes, this is just a guess, but it is one that I would bet any amount of money on. The idea that anyone at Action 10 News or WWSB actually had the time and expertise necessary to appraise this research is so absurd that thinking about it is hurting my head. As for the 1300 people who tweeted the study, maybe one or two of them read it.

That is not good. The entire point of an academic paper is to communicate ideas. A paper that no one can read, or that no one bothers reading, has no value at all. Yes, I know it’s easier and more satisfying to just skip to the conclusions section and then complain on social media about how not wearing a mask is murder. But if your goal is to reach a solid understanding of the evidence, that is no longer acceptable.

You may be inclined to simply trust the conclusion because it has been peer-reviewed or because it’s in Health Affairs. I felt that way too until I started actually reading these things. My series on the duplicity of mask science, which I admit is not long at this particular moment, is a record of major deficiencies that the peer reviewers didn’t catch, like the glaring definitional inconsistency of the Bangladesh mask trial. eugyppius writes more on this topic.

The very fact that the study is smart is what makes it so inaccessible. Whether intentionally or not, the jargon obscures the science and renders the study impenetrable for non-experts. A major reason this newsletter exists is to help bridge the knowledge gap that lets studies like this go unchallenged.

Criticizing the metascience of Huang et al., without deeply engaging with its substantive claims, makes me feel a little dirty. As mentioned earlier, perhaps I’ll rectify that in a future article. For now, though, I wanted to illustrate the dangers of gravitating toward the esoteric.